Parallel R Workflows: Welcome!

Introduction

Dr Anna Krystalli

R-RSE

https://r-rse-parallel-r.netlify.app

👋 Hello

me: Dr Anna Krystalli

Research Software Engineering Consultant, r-rse

- mastodon annakrystalli@fosstodon.org

- twitter @annakrystalli

- github @annakrystalli

- email r.rse.eu[at]gmail.com

Editor rOpenSci

Founder & Core Team member ReproHack

Objectives

In this course we’ll explore:

General concepts and strategies for parallelisation.

R packages available for parallelising computation in R, with a focus on the futureverse collection of packages.

Deploying parallel workflows on the Iridis cluster.

Background

Computation

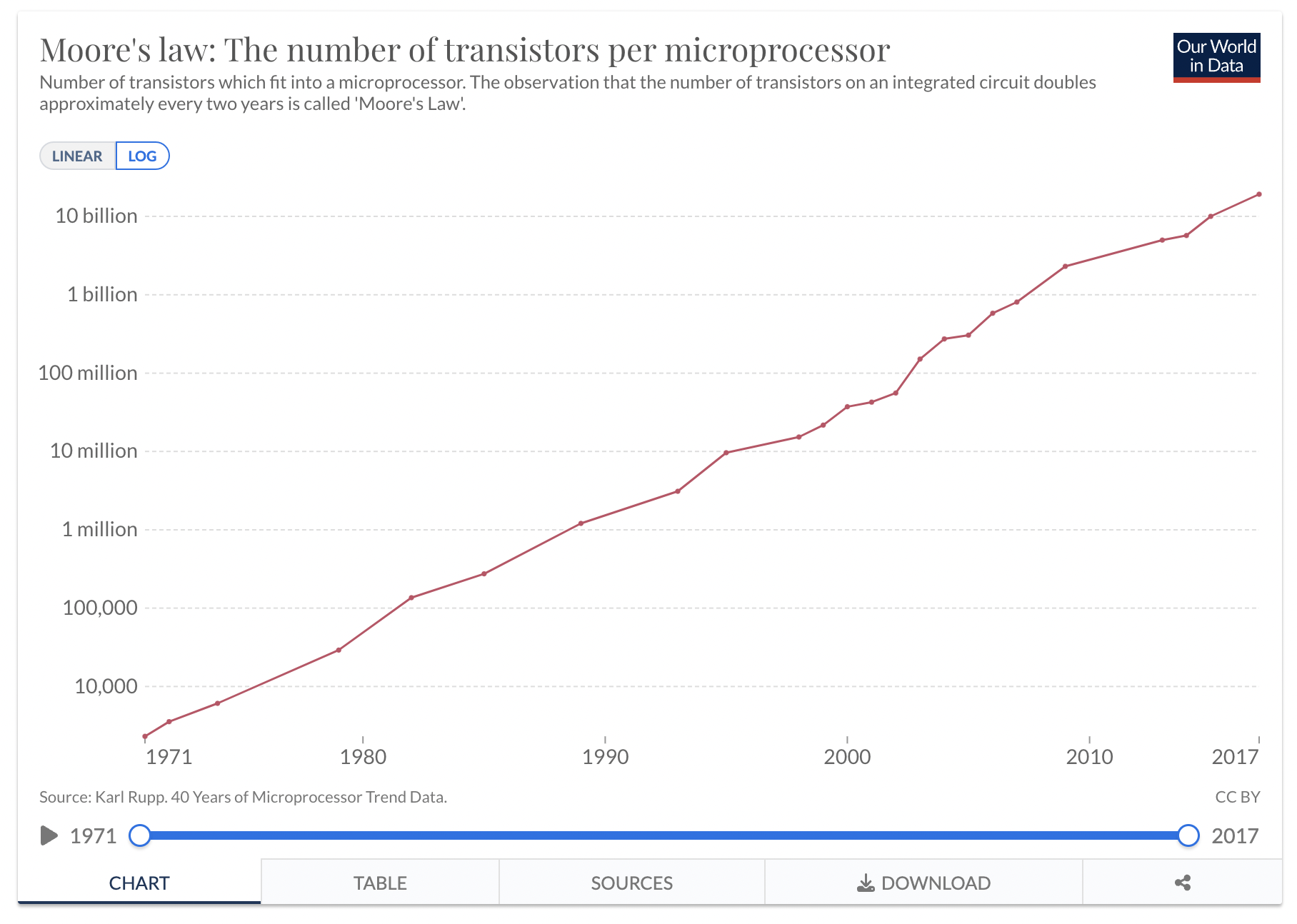

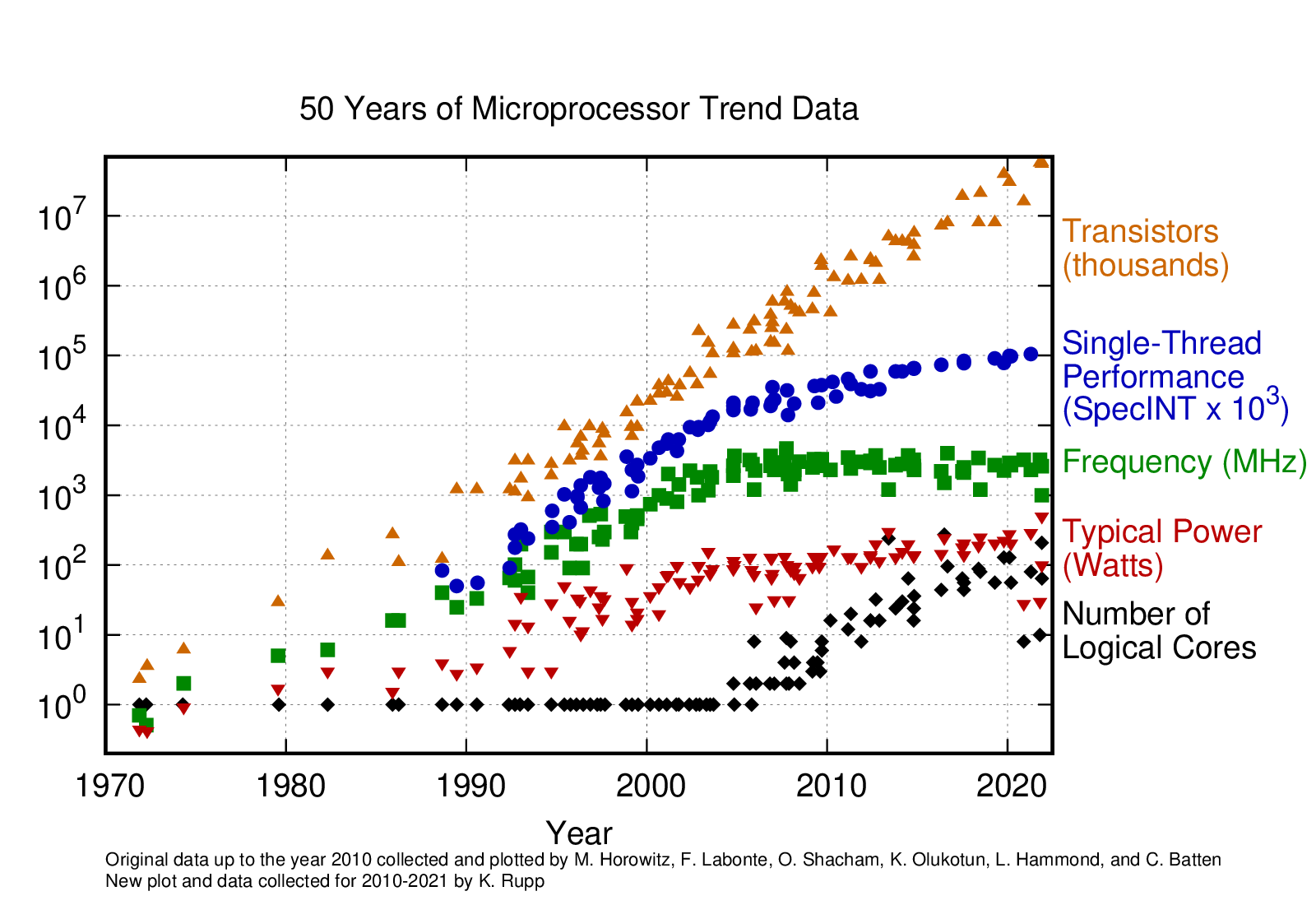

Moore’s law

Yet…

we’ve hit clock speed stagnation

About computer hardware

CPU (Processing)

RAM (memory)

Hard Disks, Networks (I/O)

About R

R is an interpreted language

Compiled Languages

Converted directly into machine code that the processor can execute.

Tend to be faster and more efficient to execute.

Need a “build” step that compiles the code for the system it will run on.

Examples: C, C++, Erlang, Haskell, Rust, and Go

Interpreted Languages

Code is interpreted line by line at run time.

Tend to be significantly slower, although just-in-time compilation is closing that gap.

Tend to be more compact and easier to write.

Examples: R, Ruby, Python, and JavaScript.

R performance

R offers some excellent features: dynamic typing, lazy functional evaluation and object-orientation

Side effect: by default, operations are undertaken in single-threaded mode, i.e. sequentially.

Many routines in R are written in compiled languages like C & Fortran.

R performance can be enhanced by linking to optimised Linear Algebra Libraries.

Many packages wrap more performant C, Fortran, C++ code.

R offers many ways to parallelise computations.
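As a rough illustration of the points above, the sketch below sums the same vector twice: once with an R-level loop and once with `sum()`, which passes the whole vector to compiled C code. The vector length and variable names are arbitrary choices for this example, and the timings will differ between machines and R versions, so treat them as indicative only.

```r
n <- 1e6
x <- as.numeric(seq_len(n))

# R-level loop: every iteration is evaluated by the R interpreter.
loop_time <- system.time({
  loop_total <- 0
  for (value in x) loop_total <- loop_total + value
})

# Vectorised call: sum() does the looping inside compiled C code.
vec_time <- system.time(vec_total <- sum(x))

# Both approaches should agree on the result.
all.equal(loop_total, vec_total)

# Elapsed seconds for each approach (machine dependent).
loop_time[["elapsed"]]
vec_time[["elapsed"]]
```

Both versions still run on a single thread; the difference comes only from where the loop is executed.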

About Parallel Computing

Types of parallel processing (problem types)

Single program, multiple data: all processors use the same program, though each has its own data. (Data parallelism)

Multiple programs, single data: each processor uses a different program on the same data. (Task parallelism)

Multiple programs, multiple data: each processor uses a different program and its own data. (Mixed Data and Task parallelism)
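The first two problem types can be sketched with base R's `parallel` package, which ships with R. This is a minimal sketch rather than the approach used later in the course: the two-worker cluster, the toy data and the names `chunks`, `tasks` and `summaries` are all assumptions made for illustration.

```r
library(parallel)

cl <- makeCluster(2)  # two local worker processes (an arbitrary choice)

# Data parallelism: one function, each worker gets its own slice of the data.
chunks <- list(1:50, 51:100)
partial_sums <- parLapply(cl, chunks, sum)
total <- Reduce(`+`, partial_sums)

# Task parallelism: different functions, all applied to the same data.
x <- c(2, 4, 4, 4, 5, 5, 7, 9)
tasks <- list(mean, median, range)
summaries <- parLapply(cl, tasks, function(f, data) f(data), data = x)

stopCluster(cl)  # always release the workers when finished

total
summaries
```

In both cases the individual pieces of work are independent of each other, which is what makes them straightforward to hand out to separate workers.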

Types of parallel programming (architecture types)

Embarrassingly parallel: the simplest type of parallelism to implement where each task in a job is completely independent of the others. There is no communication required between tasks, which is what makes it easy to implement.

Shared-memory parallelism: when tasks are run on separate CPU-cores of the same computer. In other words, a single program can access many cores on one machine. CPU-cores share memory because they are on the same computer and all have access to the same physical memory (RAM).

Distributed-memory parallelism: running tasks as multiple processes that do not share the same space in memory. This is one of the more complicated types of parallelism, since it requires a high level of communication between different tasks to ensure that everything runs properly.
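The practical consequence of workers not sharing memory can be sketched with a socket cluster from base R's `parallel` package. Here the workers happen to run on the same machine, but each is a separate R process with its own memory, so it behaves like a small distributed-memory setup. The variable `shift_by` and the two-worker cluster are hypothetical choices for this example.

```r
library(parallel)

cl <- makeCluster(2)  # two separate R processes (socket workers)
shift_by <- 10        # exists only in the main session's memory

# Workers cannot see `shift_by` until it is explicitly sent to them.
seen_before <- unlist(clusterEvalQ(cl, exists("shift_by")))

# Communicate the value to every worker, then use it there.
clusterExport(cl, "shift_by", envir = environment())
shifted <- parSapply(cl, 1:4, function(i) i + shift_by)

stopCluster(cl)

seen_before
shifted
```

With forked workers (for example `parallel::mclapply()` on Linux or macOS) this export step is not needed, because each child process starts with a copy of the parent session's memory.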

About this course

I normally like to live code…BUT!

There’s a lot of material to get through, so I will be copying & pasting from the materials a lot

Have the materials handy to follow along

Please stop me for questions or to share your own experiences

Lunch around 1pm

Let’s go!